Steve Mines at SUEZ Water UK explains why pH is critical to effective management of process water quality.

Many process applications depend on high purity water with a known pH value. Although this method of measuring the acidity or alkalinity of a solution has existed since 1909, it is often misunderstood by process engineers. As a result, water purification systems are not always optimised for best performance or water quality, which in turn can lead to increased operating costs.

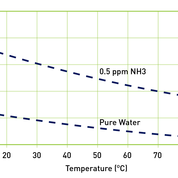

The term ‘pH’ is generally taken to be the abbreviated form of ‘the power (p) of the concentration of the hydrogen ion (H)’. The pH scale (0-14) is logarithmic, so a pH of 3 is ten times more acidic than a pH of 4 and one hundred times more acidic than a pH of 5. A solution with a pH less than 7 is defined as an acid, 7 is neutral, while a solution with a pH higher than 7 is an alkali. The pH of a solution is actually related to the activity of the hydrogen ions, rather than their concentration, and these activity levels are affected by changes in temperature.
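The logarithmic relationship described above can be illustrated with a short Python sketch. The function names are hypothetical, and the calculation treats hydrogen ion activity as a plain number in mol/L, which is a simplification of how activity is defined in practice.

```python
import math


def ph_from_activity(hydrogen_ion_activity: float) -> float:
    """Return pH as the negative base-10 logarithm of hydrogen ion activity."""
    if hydrogen_ion_activity <= 0:
        raise ValueError("hydrogen ion activity must be positive")
    return -math.log10(hydrogen_ion_activity)


def acidity_ratio(ph_a: float, ph_b: float) -> float:
    """Return how many times more acidic a solution at ph_a is than one at ph_b."""
    return 10.0 ** (ph_b - ph_a)


if __name__ == "__main__":
    print(acidity_ratio(3, 4))     # solutions one pH unit apart
    print(acidity_ratio(3, 5))     # solutions two pH units apart
    print(ph_from_activity(1e-7))  # activity typical of neutral water
```

Each step of one pH unit corresponds to a tenfold change in hydrogen ion activity, which is why small shifts in a pH reading can represent large changes in acidity.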

pH and conductivity

Measuring the pH of water used in process applications can be one of the quickest methods of determining purity, and one of the most effective methods of measuring pH accurately is to capitalise on the ability of dissolved ions in a sample to conduct electricity.

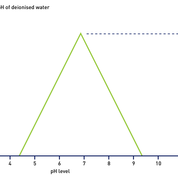

Conductivity readings, expressed as microSiemens per centimetre (µS/cm), are directly proportional to the concentration of ions, their charge and levels of activity or mobility. Indeed, the most mobile of the common ions in aqueous solutions are hydrogen [H⁺] and hydroxyl [OH⁻]; as a result, highly acidic or alkaline solutions will normally produce the highest conductivity readings. And as changes in temperature can significantly affect conductivity, conductivity measurements are internationally referenced to 25°C to allow for comparisons of different samples.
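As a rough illustration of referencing a reading to 25°C, the sketch below applies a simple linear temperature correction. The function name and the default coefficient of 2% per °C are assumptions chosen for illustration; real instruments may use different coefficients or non-linear curves, particularly for ultrapure water.

```python
REFERENCE_TEMP_C = 25.0


def compensate_to_25c(measured_us_cm: float,
                      sample_temp_c: float,
                      coeff_per_c: float = 0.02) -> float:
    """Normalise a conductivity reading (µS/cm) to 25 °C with a linear model.

    coeff_per_c is the assumed fractional change in conductivity per °C.
    """
    factor = 1.0 + coeff_per_c * (sample_temp_c - REFERENCE_TEMP_C)
    if factor <= 0:
        raise ValueError("temperature is outside the range of the linear model")
    return measured_us_cm / factor


if __name__ == "__main__":
    # A reading taken at 35 °C is scaled down to its 25 °C equivalent.
    print(compensate_to_25c(120.0, 35.0))
```

Under these assumptions, two samples measured at different temperatures can be compared on the same basis once both readings have been normalised.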

Resistivity, measured in units of megohm-centimetres (MΩ.cm), can also be used to give an accurate indication of pH. In practice, conductivity is used to measure water with a high concentration of ions, while resistivity is used to measure water with low levels of ions.
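Conductivity and resistivity are reciprocals of one another, so a reading in one unit can be converted to the other. A minimal sketch, using hypothetical function names:

```python
def to_resistivity_mohm_cm(conductivity_us_cm: float) -> float:
    """Convert conductivity in µS/cm to resistivity in MΩ.cm."""
    if conductivity_us_cm <= 0:
        raise ValueError("conductivity must be positive")
    return 1.0 / conductivity_us_cm


def to_conductivity_us_cm(resistivity_mohm_cm: float) -> float:
    """Convert resistivity in MΩ.cm to conductivity in µS/cm."""
    if resistivity_mohm_cm <= 0:
        raise ValueError("resistivity must be positive")
    return 1.0 / resistivity_mohm_cm


if __name__ == "__main__":
    # Ultrapure water at 18.2 MΩ.cm expressed as a conductivity.
    print(round(to_conductivity_us_cm(18.2), 4))
```

An 18.2 MΩ.cm reading corresponds to roughly 0.055 µS/cm, which shows why resistivity is the more convenient unit at very low ion levels.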

Measurements can be carried out using handheld conductivity or resistivity meters, connected to sensor probes that measure the concentration of hydrogen ions against a reference source, with a separate temperature reading taken to allow accurate calculation of pH value. For many process applications, fixed in-line systems are used, as they provide continuous monitoring, give higher levels of accuracy and facilitate process control.

How reliable is pH as a measure of water purity?

Once a sample of purified water is drawn for testing, it is exposed to air and will absorb carbon dioxide that reacts with the water to form carbonic acid in solution, releasing conductive ions on dissociation. Just a few ppm of CO2 dissolved in a sample of ultrapure water can reduce the pH level to around 4.0, even though the resistivity of the water, and its purity, is still at 18.2 MΩ.cm.

As well as avoiding exposure to air, samples should not be stored before pH levels are measured, while the sample containers themselves should be clean and manufactured from materials that will not leach contaminants.

Sample contamination is one of the most common – and overlooked – causes of incorrect interpretation of pH measurements. This can often lead to an erroneous assumption that a change in pH readings between samples is indicative of a malfunction of the purification or process equipment.

Ideally, pH measurements should be made on closed, flowing samples using in-line instruments, though these will require regular recalibration as fouling, scaling or chemical poisoning can affect the accuracy of readings over time. For laboratory sampling, best practice is to take a portable instrument to the source and ensure that the probe is fully immersed at the bottom of the sample container, with the sample being allowed to overflow.

pH meters should also only be used in applications for which they have been developed; in particular, they are generally calibrated for samples containing high levels of contamination, which makes them unsuitable for measuring the quality of ultrapure water to any degree of accuracy unless they are fitted with specialised probes.

It is also important to maintain consistent sampling and testing procedures. For example, something as simple as a build-up of salts on probes, a fluctuation in sample temperatures, or a change in personnel or test laboratory, can quickly lead to inaccurate and inconsistent readings.

By comparison, conductivity probes offer a far more reliable method of determining water quality. These instruments are simple to use, less prone to the environmental effects that influence pH devices, and automatically compensate for fluctuations in temperature.

pH can be a valuable tool for effective process control in many different applications. Used and interpreted correctly, it will give an accurate indication of water purity, helping to improve the efficiency, consistency and reliability of purification and treatment systems, which in turn can enhance levels of quality while reducing operating costs.